Published on 25 August 2023

A foundation model for airborne imagery

Aerial imagery is a primary data source for identifying changes in the built environment, and recent advances in AI technology have greatly expanded the possibilities for automatic change detection. Improving services like the GRB gives policy makers easy access to up-to-date and reliable data, which are crucial for urban planning, resource management, and sustainable growth. In collaboration with the GRB and EODaS teams of Digital Flanders, we set out to develop a change detection algorithm specifically tailored to airborne imagery.

DINO [1] is a self-supervised method, developed by Meta AI Research, for training a vision transformer (ViT) model to process image and video data. Unlike large language models, DINO is tailored for visual tasks like classification, retrieval, understanding, and segmentation. Because the model is pretrained without supervision on a diverse image dataset, its features are versatile and perform well on new data without extra tuning.

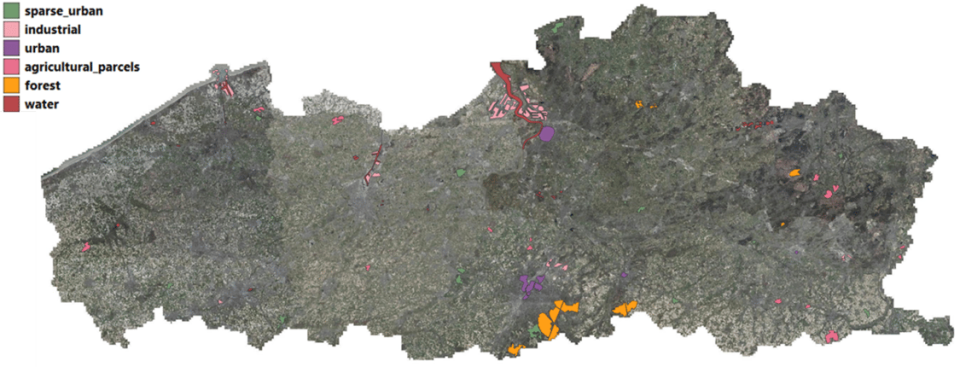

VITO's Remote Sensing team adapted the original DINO model architecture to multispectral data and trained a customized foundation model from the ground up on a meticulously curated, diverse dataset of very-high-resolution (VHR) airborne imagery tiles over Flanders. The dataset's variety and multi-year coverage result in effective generalization, ensuring accurate and robust change detection results.

Heterogeneous dataset for the unsupervised training of a foundation model for Flanders.

Achieving precision in built environment monitoring

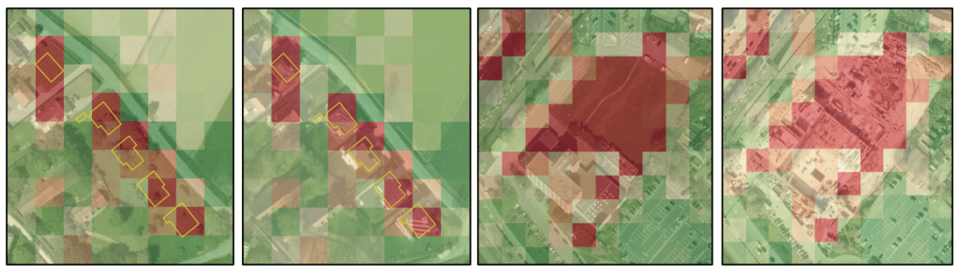

To capture changes in the built environment at a suitable granularity, the team selected a tile size of 16 m × 16 m, on which change detection and classification are performed. This choice strikes a balance: a tile offers sufficient detail for a typical residential building while still encompassing contextual elements of the surroundings, such as gardens and road infrastructure. With the customized model, the team generates highly informative features that correspond to the semantic content of each individual tile. Similar tiles therefore group together in the feature space, which forms the backbone of the change detection process. By applying the model to successive years of airborne imagery and calculating a similarity metric between the resulting features, the team can create dynamic change maps (see figure below) that reveal the evolving urban landscape in high detail.

Example of change detection between successive years.
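The per-tile comparison described above can be illustrated with a minimal sketch. It rests on several assumptions that the article does not confirm: cosine similarity stands in for the unspecified similarity metric, the threshold of 0.8 is an arbitrary illustrative value, the `change_map` helper is hypothetical, and the three-dimensional embeddings are toy numbers, not real model output.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def change_map(features_prev, features_next, threshold=0.8):
    """Compare per-tile embeddings from two acquisition years.

    features_prev / features_next: dict mapping a (row, col) tile index
    to that tile's embedding (a list of floats).
    threshold: hypothetical cut-off; tiles whose similarity falls below
    it are flagged as changed.
    """
    changes = {}
    for tile, emb_prev in features_prev.items():
        emb_next = features_next.get(tile)
        if emb_next is None:
            continue  # tile not covered in the later year
        sim = cosine_similarity(emb_prev, emb_next)
        changes[tile] = {"similarity": sim, "changed": sim < threshold}
    return changes


# Toy embeddings for two 16 m x 16 m tiles in two successive years.
year_a = {(0, 0): [1.0, 0.0, 0.0], (0, 1): [0.0, 1.0, 0.0]}
year_b = {(0, 0): [1.0, 0.0, 0.0], (0, 1): [1.0, 0.0, 0.0]}

result = change_map(year_a, year_b)
for tile, info in sorted(result.items()):
    print(tile, round(info["similarity"], 3), info["changed"])
```

In this toy case the first tile keeps the same embedding and is left unflagged, while the second tile's embedding changes direction and is flagged. In practice the threshold would need tuning against known changes, and a continuous similarity map may be more informative than a binary flag.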

Change detection beyond built environment

The strength of the model lies in its representational power and in the fact that the team can train it fully unsupervised, without any explicit ground truth. This enables them to capture changes not only within the built environment but also across the broader landscape, and to gain valuable insights into environmental changes and land use patterns that extend beyond urban areas.