Image processing

Visual interpretation

Every picture needs an interpreter

In the very beginning, when remote sensing had only aerial photographs to work with, digital images and computerised classification schemes were not an option. The photographs were analysed by experienced interpreters who used overlays and coloured pencils to extract from them a considerable amount of information.

Whilst the first satellite images were also photographs, they were very quickly replaced by digital images, and methods for analysing them on powerful computers were devised at the same time. Two approaches thus developed in parallel: the visual interpretation of analogue (that is, non-digital) aerial photographs and the computer processing of digital satellite images. This separation is in fact artificial, for digital satellite images can be interpreted visually, just as aerial photographs can be digitised and processed by computers.

The main difference between the two approaches is obvious. Digital classification methods assign a type of land cover to each pixel in the image according to its spectral signature. In visual interpretation, the operators also base their analyses on the objects’ colours (that is, on their spectral signatures), but they additionally, and above all, take into account the objects’ shapes, sizes and positions relative to one another in the picture.
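
To make the per-pixel principle concrete, here is a minimal sketch of one simple supervised scheme, a minimum-distance classifier, which gives each pixel the class whose mean spectral signature is nearest to it. The class names, the three bands and all the reflectance values are illustrative assumptions, not data taken from the image discussed here, and real classification software typically uses more elaborate decision rules.

```python
import math

# Hypothetical mean signatures: reflectance in green, red and near-infrared.
# These values are illustrative assumptions, not measurements.
SIGNATURES = {
    "water": (0.05, 0.03, 0.02),
    "forest": (0.05, 0.04, 0.40),
    "bare soil": (0.15, 0.20, 0.30),
}


def classify_pixel(pixel, signatures=SIGNATURES):
    """Return the class whose mean signature is closest to the pixel."""
    return min(signatures, key=lambda name: math.dist(pixel, signatures[name]))


def classify_image(image, signatures=SIGNATURES):
    """Classify every pixel independently; shape and context are ignored."""
    return [[classify_pixel(pixel, signatures) for pixel in row] for row in image]


# A tiny 2 x 2 image; each pixel is a (green, red, near-infrared) tuple.
image = [
    [(0.04, 0.03, 0.03), (0.06, 0.05, 0.38)],
    [(0.14, 0.22, 0.28), (0.05, 0.04, 0.41)],
]

for row in classify_image(image):
    print(row)
```

Each pixel is labelled on its own, without reference to its neighbours, which is precisely what sets this approach apart from visual interpretation.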

Whilst it is common in supervised digital classification to mistake tarred roofing for the asphalt of a road, such a mix-up is inconceivable in visual interpretation, given the objects’ very different shapes!
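
The role of shape can be illustrated with a deliberately crude sketch. Suppose that two groups of pixels have received the same spectral class; a single shape measure, here the elongation of the bounding box, is then enough to tell a compact roof from a long, narrow road. The function names, the threshold of 5 and the two test regions are hypothetical choices made for this illustration. An interpreter's judgement is far richer than this, and the bounding box would fail for a diagonal or winding road.

```python
def elongation(region):
    """Ratio of the longer to the shorter side of the region's bounding box."""
    rows = [r for r, _ in region]
    cols = [c for _, c in region]
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    return max(height, width) / min(height, width)


def label_dark_region(region, threshold=5.0):
    """Label a spectrally ambiguous region from its shape alone.

    The threshold is an arbitrary assumption chosen for this example.
    """
    return "road" if elongation(region) >= threshold else "roof"


# Two regions of (row, column) pixels with the same spectral class.
roof = {(r, c) for r in range(4) for c in range(5)}    # 4 x 5 block
road = {(r, c) for r in range(2) for c in range(30)}   # 2 x 30 strip

print(label_dark_region(roof))  # roof
print(label_dark_region(road))  # road
```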

What is more, whereas the supervised classification of spectral signatures is limited to recognising different types of cover, visual interpretation can go much farther and identify, for example, the functions of specific buildings, agricultural practices, the structure of transport and road networks, etc.

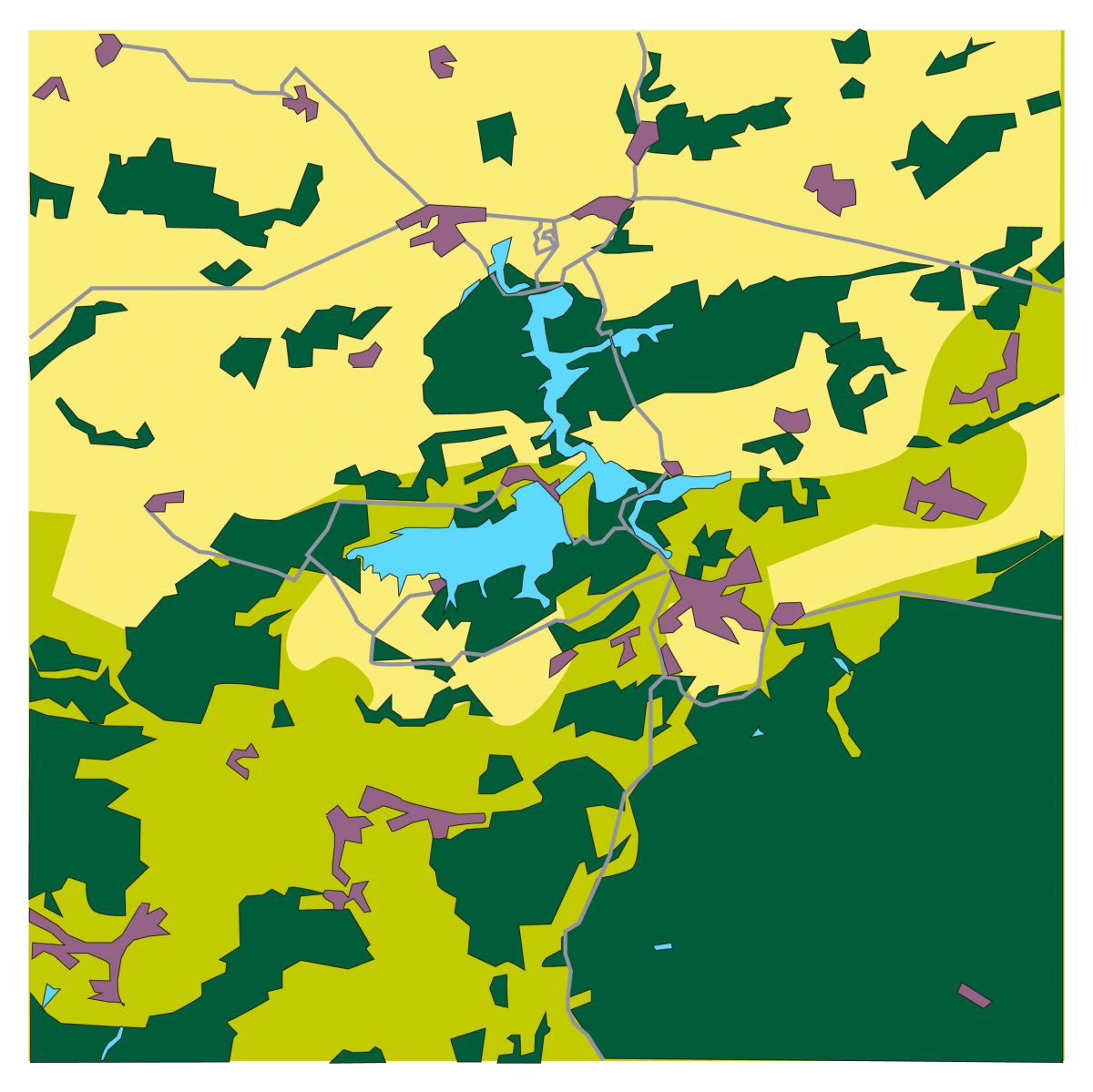

In this interpretation of the image of the Eau d’Heure lakes we have circumscribed the various forests rather accurately and distinguished between bare ground used for farming and urban areas. In contrast, we deliberately opted for a very crude classification of the cultivated areas, having decided to distinguish only between the areas in the image where fields cover the majority of the land (in yellow) and the areas where grasslands predominate (in light green). These results are rather different from those obtained by digital classification at the pixel level, but are usually better suited to the users’ needs. The two approaches can obviously be combined. For example, the ‘natural’ land uses may be mapped by supervised classification and the built-up areas, road network and other such structures extracted by visual interpretation.
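
One simple way of combining the two results, sketched below under stated assumptions, is to overlay the interpreter's labels on the classified image so that they take precedence wherever they exist. The grids, the labels and the `combine` function are hypothetical; in practice this kind of overlay would be done in GIS or image-processing software.

```python
def combine(classified, interpreted):
    """Overlay two label grids of equal size.

    A label from visual interpretation replaces the per-pixel class
    wherever the interpreter supplied one (that is, wherever it is not None).
    """
    return [
        [vis if vis is not None else auto for auto, vis in zip(auto_row, vis_row)]
        for auto_row, vis_row in zip(classified, interpreted)
    ]


# Output of a per-pixel supervised classification (hypothetical labels).
classified = [
    ["forest", "field", "field"],
    ["forest", "bare soil", "bare soil"],
]

# Labels digitised by an interpreter; None means no visual label here.
interpreted = [
    [None, None, "road"],
    [None, "built-up", "built-up"],
]

combined = combine(classified, interpreted)
for row in combined:
    print(row)
```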

The main drawback of photo-interpretation is that it is tedious. What is more, to be effective it must be done by highly experienced interpreters. Over the last few years it has become possible to harness the computer’s great potential for computer-aided photo-interpretation. Conversely, some of the concepts used in visual interpretation (shape recognition, texture analysis, etc.) are starting to be taken up by computerised image-processing programs. However, these require considerable computational power and have so far yielded only partial results.