2.2- Main types of instruments

Passive instruments

Passive imaging sensors use naturally available radiation (reflected sunlight or radiation emitted by the Earth itself) to image objects. They therefore have no light source of their own (see Characteristics of instruments - Passive and active sensors). Passive sensors make observations in different parts of the spectrum, but most of them are optical. By the optical part of the electromagnetic spectrum we mean radiation with wavelengths between 0.3 and 14 micrometres, which includes ultraviolet, visible and infrared light. This part of the spectrum is called "optical" because lenses and mirrors can refract or reflect this energy.

The most commonly used optical instruments are so-called "multispectral scanners". They are carried on satellite platforms such as Landsat, Terra and Aqua (MODIS), Sentinel-2 and Sentinel-3, SPOT, Pléiades and WorldView, but they are also often used for digital aerial imaging. They have a relatively small number of fairly wide spectral channels, usually including at least a blue (Blue), green (Green), red (Red) and near-infrared (Near InfraRed - NIR) channel. With these, you can make true colour images (R-G-B) or false colour composites (e.g. NIR-R-G).
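To make the idea of a composite concrete, here is a minimal sketch, assuming the bands are already loaded as equally sized NumPy arrays. Each band is contrast-stretched (here a 2-98 percentile stretch, an assumed but common choice) and then assigned to the red, green or blue display channel. The band arrays below are hypothetical random data, not real imagery.

```python
import numpy as np

def stretch(band: np.ndarray, low_pct: float = 2.0, high_pct: float = 98.0) -> np.ndarray:
    """Linearly rescale a band to 0..1, clipping the darkest and brightest pixels."""
    lo, hi = np.percentile(band, [low_pct, high_pct])
    if hi == lo:  # flat band: avoid division by zero
        return np.zeros_like(band, dtype=float)
    return np.clip((band - lo) / (hi - lo), 0.0, 1.0)

def composite(red_channel, green_channel, blue_channel) -> np.ndarray:
    """Stack three stretched bands into a (rows, cols, 3) array for display."""
    return np.dstack([stretch(b) for b in (red_channel, green_channel, blue_channel)])

# Hypothetical reflectance arrays standing in for real Blue, Green, Red and NIR bands.
rng = np.random.default_rng(0)
blue, green, red, nir = (rng.random((4, 4)) for _ in range(4))

true_colour = composite(red, green, blue)   # R-G-B: looks roughly as the eye sees it
false_colour = composite(nir, red, green)   # NIR-R-G: in real imagery, vegetation appears red
```

Only the assignment of bands to display channels differs between the two composites; a display library such as matplotlib could then show either array directly.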

The MSI (MultiSpectral Instrument) on board Sentinel-2, the OLCI (Ocean and Land Colour Instrument) on Sentinel-3 and the OLI-2 (Operational Land Imager 2) on Landsat 9 also have additional channels in other parts of the near-infrared or in the short-wave infrared (Short-Wave InfraRed - SWIR). Landsat 8 and 9 (TIRS) and Sentinel-3 (SLSTR) also carry instruments that observe in the thermal infrared (Thermal InfraRed - TIR).

There are also hyperspectral instruments which, unlike multispectral sensors, observe in a very large number of relatively narrow spectral bands. Examples include AVIRIS (Airborne Visible / Infrared Imaging Spectrometer), with 224 contiguous spectral channels between 400 and 2500 nanometres, and APEX (Airborne Prism Experiment, developed by a Belgian-Swiss consortium), with around 300 channels over the same wavelength range.
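How narrow are these bands? A rough estimate, assuming the channels are contiguous and of equal width (a simplification; real band widths vary across the range), is the covered range divided by the number of channels:

```python
def mean_band_width_nm(start_nm: float, end_nm: float, n_bands: int) -> float:
    """Average width per band if n_bands contiguous bands tile the range evenly."""
    return (end_nm - start_nm) / n_bands

aviris = mean_band_width_nm(400.0, 2500.0, 224)  # 9.375 nm
apex = mean_band_width_nm(400.0, 2500.0, 300)    # 7.0 nm (using the approximate channel count)
```

Bands of roughly 10 nm or less are what allow a hyperspectral sensor to record an almost continuous spectrum for every pixel, whereas multispectral bands are typically several times wider.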

Although these two instruments are flown only on aircraft, hyperspectral sensors are also carried on satellites. One example is the German EnMAP (Environmental Mapping and Analysis Program) satellite, whose wavelength range and number of channels are similar, but whose images have less spatial detail than aerial images.

Finally, there are also passive sensors that detect microwave radiation emitted by the Earth itself. Because this radiation carries so little energy, it has to be collected over a large area, so the data offer little spatial detail. Such instruments are used for long-term monitoring of sea ice and for measuring changes in soil moisture or sea surface salinity. Examples are AMSR-E (Advanced Microwave Scanning Radiometer for EOS, the Earth Observing System) and MIRAS (Microwave Imaging Radiometer with Aperture Synthesis), the instrument of ESA's Soil Moisture and Ocean Salinity (SMOS) mission.
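Just how weak the Earth's microwave emission is can be illustrated with Planck's law for black-body radiation (not named in the text above, but the standard description of thermal emission). The sketch below assumes an idealised black body at 300 K and compares spectral radiance per unit wavelength at 10 µm (thermal infrared) and at 21 cm (an L-band microwave wavelength, chosen here for illustration). Real surfaces emit less than a black body, but the orders-of-magnitude difference remains.

```python
import math

H = 6.62607015e-34   # Planck constant (J s)
C = 2.99792458e8     # speed of light (m/s)
K_B = 1.380649e-23   # Boltzmann constant (J/K)

def planck_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Black-body spectral radiance in W m^-2 sr^-1 per metre of wavelength."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    # expm1 keeps the result accurate when x is tiny, as it is for microwaves
    return (2.0 * H * C**2 / wavelength_m**5) / math.expm1(x)

T_EARTH = 300.0  # assumed surface temperature (K)
thermal_ir = planck_radiance(10e-6, T_EARTH)  # 10 micrometres, thermal infrared
microwave = planck_radiance(0.21, T_EARTH)    # 21 cm, L-band microwave
print(f"thermal IR / microwave radiance ratio: {thermal_ir / microwave:.1e}")
```

The ratio is many orders of magnitude, which is why a passive microwave radiometer must integrate energy from a large footprint to obtain a usable signal.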

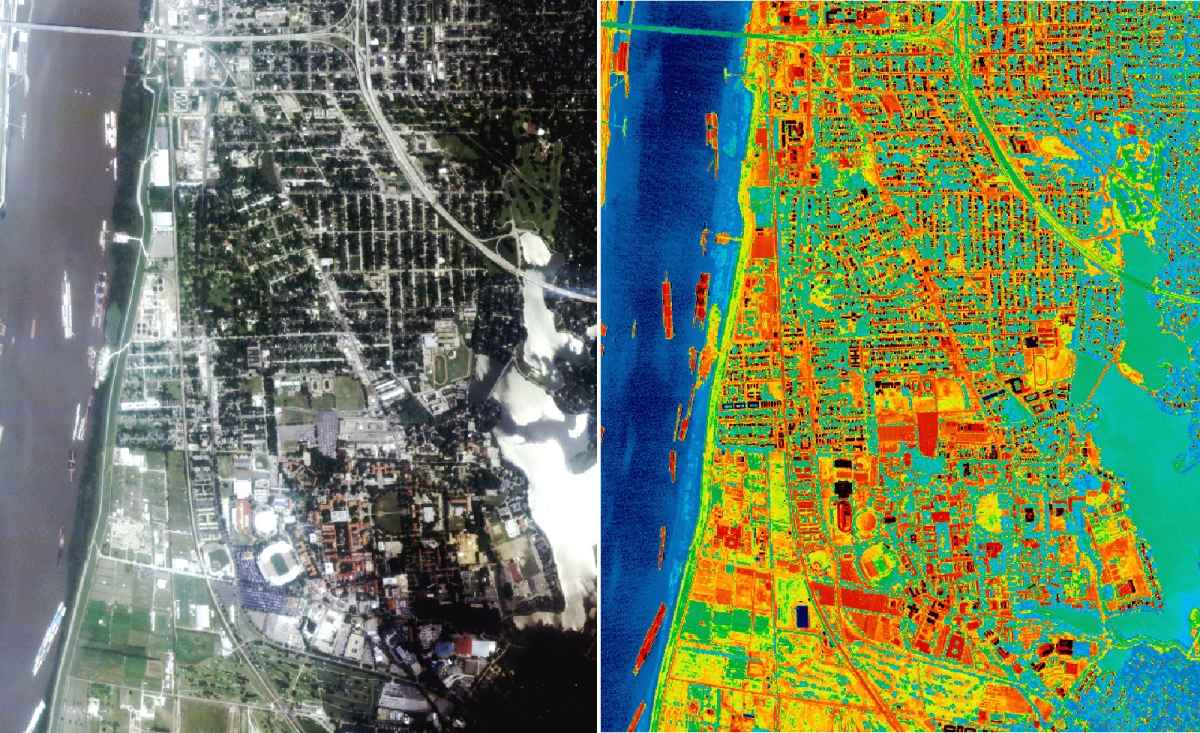

RGB aerial photo (left) and thermal infrared photo (right) of Baton Rouge, Louisiana. Yellow and red areas are warm and generally correspond to roads and buildings; the blue and green areas are cool and correspond to water and vegetation. In this image, the bright red areas have a temperature of around 65°C; the dark green and blue areas are around 25°C. The blue strip on the left is the Mississippi River. Courtesy of NASA-Marshall Space Flight Center-Global Hydrology and Climate.

A sensor whose detectors are sensitive to thermal infrared radiation records the highest values in the warmest areas. When these values are displayed on a greyscale from 0 (black) to 255 (white), the warm areas stand out clearly as bright pixels. A different colour scale can of course be chosen: in the example, warmer areas are shown in red and colder ones in blue.
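The display step described above can be sketched as a linear rescaling to 8-bit values followed by a colour ramp. This is a minimal illustration, assuming NumPy arrays of surface temperatures; the two-colour blue-to-red palette is a simplified, hypothetical stand-in for the multi-colour scale used in the NASA image, and the temperatures are made up.

```python
import numpy as np

def to_8bit(values: np.ndarray) -> np.ndarray:
    """Linearly map values so the minimum becomes 0 (black) and the maximum 255 (white)."""
    vmin, vmax = float(values.min()), float(values.max())
    if vmax == vmin:
        return np.zeros(values.shape, dtype=np.uint8)
    scaled = (values - vmin) / (vmax - vmin) * 255.0
    return np.round(scaled).astype(np.uint8)

def blue_to_red(grey: np.ndarray) -> np.ndarray:
    """Assumed two-colour ramp: 0 -> pure blue, 255 -> pure red."""
    t = grey.astype(float) / 255.0
    rgb = np.zeros(grey.shape + (3,), dtype=np.uint8)
    rgb[..., 0] = np.round(t * 255).astype(np.uint8)          # red grows with temperature
    rgb[..., 2] = np.round((1.0 - t) * 255).astype(np.uint8)  # blue fades with temperature
    return rgb

# Hypothetical surface temperatures in °C (water, vegetation, roofs, asphalt).
temps = np.array([[25.0, 30.0], [50.0, 65.0]])
grey = to_8bit(temps)        # [[0, 32], [159, 255]]
colour = blue_to_red(grey)   # coldest pixel blue, warmest pixel red
```

Changing the palette only changes how the same 0-255 values are drawn; the recorded measurements are untouched.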

Active sensors: Radar & LiDAR

An active sensor emits an electromagnetic signal to "illuminate" the terrain. Objects on the surface interact with this signal and, depending on their physical properties, reflect part of it back to the sensor, which records it. Active sensors therefore do not depend on sunlight and can operate 24 hours a day; radar in particular is also largely independent of weather conditions (see Radiation sources). The active sensors most commonly used in remote sensing are SAR (Synthetic Aperture Radar) and LiDAR (Light Detection and Ranging).

RADAR

RADAR stands for RAdio Detection And Ranging. The technology was developed around the Second World War and uses radio waves or microwaves to determine the distance, angle or speed of aircraft, ships and similar objects. The imaging radars used in remote sensing (SAR) operate in the microwave part of the electromagnetic spectrum (wavelengths from 1 mm to 1 metre), beyond the visible and thermal infrared regions.

The area we want to image is therefore illuminated not by light but by microwaves. Microwaves interact with objects on the Earth's surface differently from visible or infrared light. The strength of the returned signal relative to the transmitted signal (called backscatter) depends mainly on the roughness, shape and dielectric properties (electrical permittivity) of the observed object, as well as on the properties of the SAR system itself. Microwave images contain a lot of useful information about surface cover and moisture content, but they are more difficult to interpret than optical images.
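Because backscatter is a ratio that can span several orders of magnitude, SAR products commonly express it in decibels. A minimal sketch of the conversion, assuming the input is already a calibrated linear ratio (such as the backscatter coefficient):

```python
import numpy as np

def to_decibels(ratio):
    """Convert a linear backscatter ratio to decibels: 10 * log10(ratio)."""
    return 10.0 * np.log10(np.asarray(ratio, dtype=float))

def from_decibels(db):
    """Inverse conversion from decibels back to a linear ratio."""
    return 10.0 ** (np.asarray(db, dtype=float) / 10.0)

# A return 100 times weaker than a reference of 1 corresponds to -20 dB.
values_db = to_decibels([1.0, 0.1, 0.01])  # [0, -10, -20]
```

Working in decibels compresses the range, so smooth, dark surfaces such as calm water and bright, rough or built-up surfaces can be displayed on the same scale. Averaging should still be done on the linear values.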

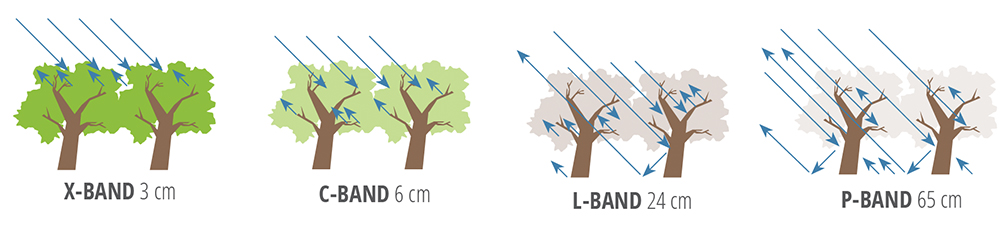

The wavelength used in the radar system determines how deep the signal can penetrate the foliage. Shorter waves interact mainly with the individual leaves while longer waves can penetrate down to the ground. Source: NASA SAR Handbook

SAR sensors on board aircraft or satellites are radars with a synthetic aperture: as the platform moves over the target area, the radar antenna keeps illuminating it with microwaves, and the echoes recorded along this path are combined. This creates an artificially large antenna, which yields images with much greater spatial detail than the same physical antenna could provide without this technique.
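The gain from the synthetic aperture can be quantified with two textbook approximations for along-track (azimuth) resolution: a conventional real-aperture radar resolves about wavelength × range / antenna length, while an idealised stripmap SAR resolves about half the antenna length, independent of range. The numbers below are assumed, illustrative values for a C-band satellite radar, not the specification of a particular mission, and the formulas ignore processing details such as multilooking.

```python
def real_aperture_azimuth_resolution(wavelength_m: float, slant_range_m: float,
                                     antenna_length_m: float) -> float:
    """Along-track footprint of a conventional radar beam: beam width (wavelength / length) times range."""
    return wavelength_m * slant_range_m / antenna_length_m

def sar_azimuth_resolution(antenna_length_m: float) -> float:
    """Idealised stripmap SAR along-track resolution: about half the physical antenna length."""
    return antenna_length_m / 2.0

# Assumed, illustrative values.
wavelength = 0.0555      # m (C-band)
slant_range = 850e3      # m
antenna_length = 12.0    # m

print(f"real aperture: {real_aperture_azimuth_resolution(wavelength, slant_range, antenna_length):.0f} m")
print(f"synthetic aperture: {sar_azimuth_resolution(antenna_length):.1f} m")
```

With these values the difference is roughly 3.9 km versus 6 m, which is why satellite radar imaging is practical only with aperture synthesis.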

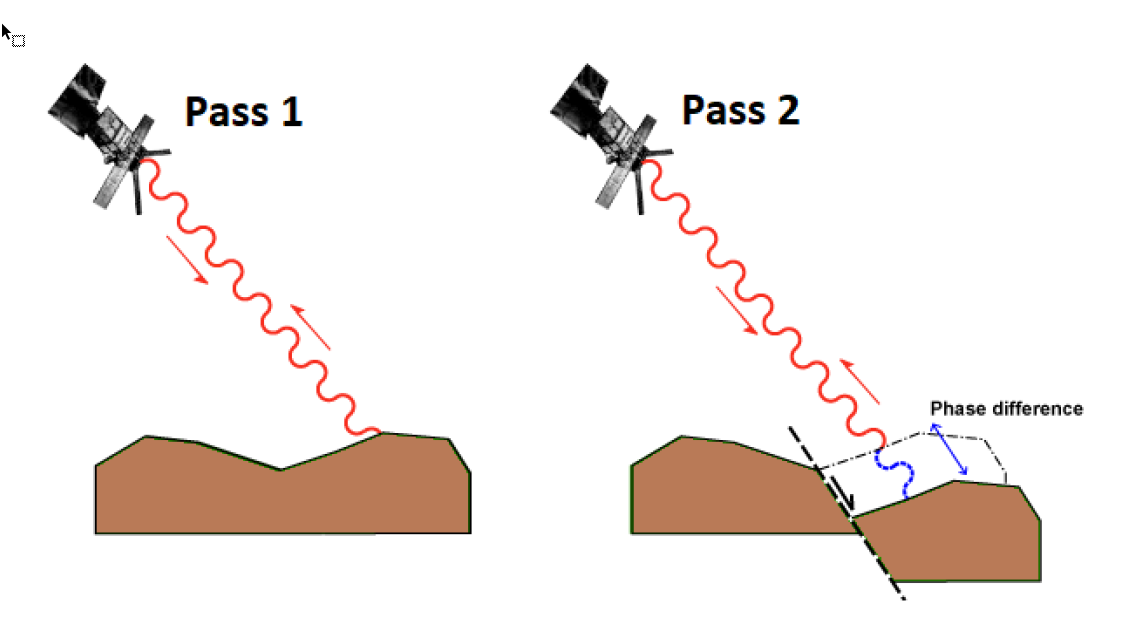

Two SAR images acquired from slightly different viewing angles can also form a so-called interferometric pair. The phase differences between the two returned signals can then be used to create digital elevation models, to observe terrain changes or to further increase the resolution in the antenna viewing direction.

Principle of SAR interferometry (InSAR): by determining the phase difference between two radar images, changes in distance between the ground and the satellite can be determined. Source: Castellazzi et al. (2020). Ground displacements in the Lower Namoi region. 10.13140/RG.2.2.20466.53442
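The link between phase and distance can be written down directly. Because the signal travels to the ground and back, a change d in the satellite-to-ground distance changes the phase by 4πd / λ, so d = λ·Δφ / (4π). The sketch below assumes the topographic and atmospheric phase contributions have already been removed, which real InSAR processing must do first; the sign convention (movement towards or away from the satellite) also differs between software packages.

```python
import math

def los_displacement_m(phase_diff_rad: float, wavelength_m: float) -> float:
    """Line-of-sight distance change from an interferometric phase difference.

    Two-way path: a distance change d shifts the phase by 4*pi*d / wavelength.
    Assumes all non-deformation phase terms have already been removed.
    """
    return wavelength_m * phase_diff_rad / (4.0 * math.pi)

# One full interference fringe (2*pi) at a C-band wavelength of 5.55 cm.
one_fringe = los_displacement_m(2.0 * math.pi, 0.0555)  # 0.02775 m, i.e. half a wavelength
```

Each colour cycle (fringe) in a differential interferogram therefore corresponds to a line-of-sight displacement of half the radar wavelength, which is what makes InSAR sensitive to ground movements of a few millimetres to centimetres.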


Unlike passive optical sensors, which measure light separately in multiple intervals of the spectrum, SAR sensors typically operate at a single wavelength. For example, the European Sentinel-1 satellite operates at a central wavelength of about 5.55 cm, in the range called the C-band (3.8 cm - 7.5 cm). SAR sensors that operate at shorter wavelengths, such as COSMO-SkyMed in the X-band (about 2.5 cm - 3.8 cm), are suitable for detailed mapping because they provide images with high spatial resolution. However, their signal penetrates foliage poorly, so it degrades faster in densely vegetated areas. The PALSAR-2 sensor on board the Japanese ALOS-2 satellite, on the other hand, uses longer wavelengths in the L-band (15 cm - 30 cm) precisely because its images remain more coherent over time, especially in densely vegetated areas. Their spatial detail, however, is generally lower.
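Radar bands are often quoted by frequency rather than wavelength; the two are related by f = c / λ. The sketch below converts a wavelength to a frequency and looks up the band letter using the standard IEEE radar letter designations (band edges differ slightly between sources, so treat the table as approximate).

```python
C = 299_792_458.0  # speed of light (m/s)

# IEEE radar letter bands as (name, lower GHz, upper GHz).
IEEE_BANDS_GHZ = [
    ("L", 1.0, 2.0), ("S", 2.0, 4.0), ("C", 4.0, 8.0), ("X", 8.0, 12.0),
    ("Ku", 12.0, 18.0), ("K", 18.0, 27.0), ("Ka", 27.0, 40.0),
]

def wavelength_to_ghz(wavelength_m: float) -> float:
    """Frequency in GHz for a given wavelength in metres."""
    return C / wavelength_m / 1e9

def radar_band(wavelength_m: float) -> str:
    """Letter band containing the given wavelength, or a note if outside L to Ka."""
    f = wavelength_to_ghz(wavelength_m)
    for name, lo, hi in IEEE_BANDS_GHZ:
        if lo <= f < hi:
            return name
    return "outside L-Ka"

print(radar_band(0.0555), round(wavelength_to_ghz(0.0555), 2))  # Sentinel-1: C 5.4
```

Note the inverse relationship: the longest wavelengths (L-band, around 1-2 GHz) have the lowest frequencies, and these are the ones that penetrate vegetation best.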

LiDAR

Another example of an active sensor is LiDAR. The name is an acronym for "Light (or Laser Imaging) Detection And Ranging" and is used as an umbrella term for detecting and determining the distance to an object using laser light.

LiDAR is an active remote sensing technique that uses pulsed laser light in the ultraviolet, visible or infrared range to measure distances. By measuring the time it takes for a light pulse to travel from the transceiver (for example on board an aircraft) to the target and back, the distance between the two can be determined.
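The time-of-flight principle reduces to one formula: range = c × t / 2, where t is the measured round-trip time and the division by 2 accounts for the pulse travelling there and back. A minimal sketch, assuming propagation at the vacuum speed of light (the slight slowdown in air is ignored here); the same relation underlies radar ranging.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)

def range_from_round_trip(t_seconds: float) -> float:
    """Distance to the target from the round-trip travel time of a pulse."""
    return C * t_seconds / 2.0

# A return recorded 6.67 microseconds after emission comes from roughly 1 km away.
print(f"{range_from_round_trip(6.67e-6):.1f} m")  # 999.8 m
```

Since light covers about 30 cm per nanosecond, centimetre-level ranging requires timing the returns to within a fraction of a nanosecond.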

LiDAR enables the collection of highly accurate three-dimensional (x,y,z) data points (so-called "point clouds") to provide detailed information about the shape of the Earth and its surface features.
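Each point in such a cloud follows from the sensor's position, the direction of the laser beam and the measured range: point = position + range × direction. The sketch below is a strongly simplified, hypothetical scanner model, assuming a level aircraft flying along the y axis, no roll, pitch or yaw, and a scan angle measured from nadir across the flight track. In real systems the position comes from GPS and the orientation from an inertial measurement unit, as described later in this section.

```python
import math

def lidar_point(sensor_xyz, scan_angle_deg, range_m):
    """Ground coordinates (x, y, z) of one laser return for a simplified cross-track scanner.

    Assumptions: flight along +y, level platform, scan angle from nadir,
    positive angles pointing to the +x side of the track.
    """
    x0, y0, z0 = sensor_xyz
    a = math.radians(scan_angle_deg)
    return (x0 + range_m * math.sin(a), y0, z0 - range_m * math.cos(a))

# Sensor 1000 m above flat ground (z = 0): ranges grow with the scan angle.
points = [lidar_point((0.0, 0.0, 1000.0), angle, 1000.0 / math.cos(math.radians(angle)))
          for angle in (-20.0, 0.0, 20.0)]
```

All three returns land at z = 0, while the off-nadir ones are displaced about 364 m to either side of the flight line; repeating this for millions of pulses builds up the (x, y, z) point cloud.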


The first experiments using light to determine distances date back to the 1930s, when light was used to study the atmosphere and determine the height of clouds. After the invention of the laser in 1960 and further developments driven by military applications (including target acquisition) and by NASA investments (solar system exploration), the technology became advanced enough to produce highly detailed elevation models. Since GPS became publicly available, lidar systems combined with GPS receivers and inertial measurement units (IMUs) aboard aircraft have often been used to collect positionally accurate topographic data. Today, laser scanning technology, which continues to develop, is used in a wide range of scientific disciplines and applications: estimating the impact of natural disasters (landslides, lava flows, floods, ...), monitoring river basins, rivers and floodplains, environmental projects, geomorphology, infrastructure works, archaeological research and more.